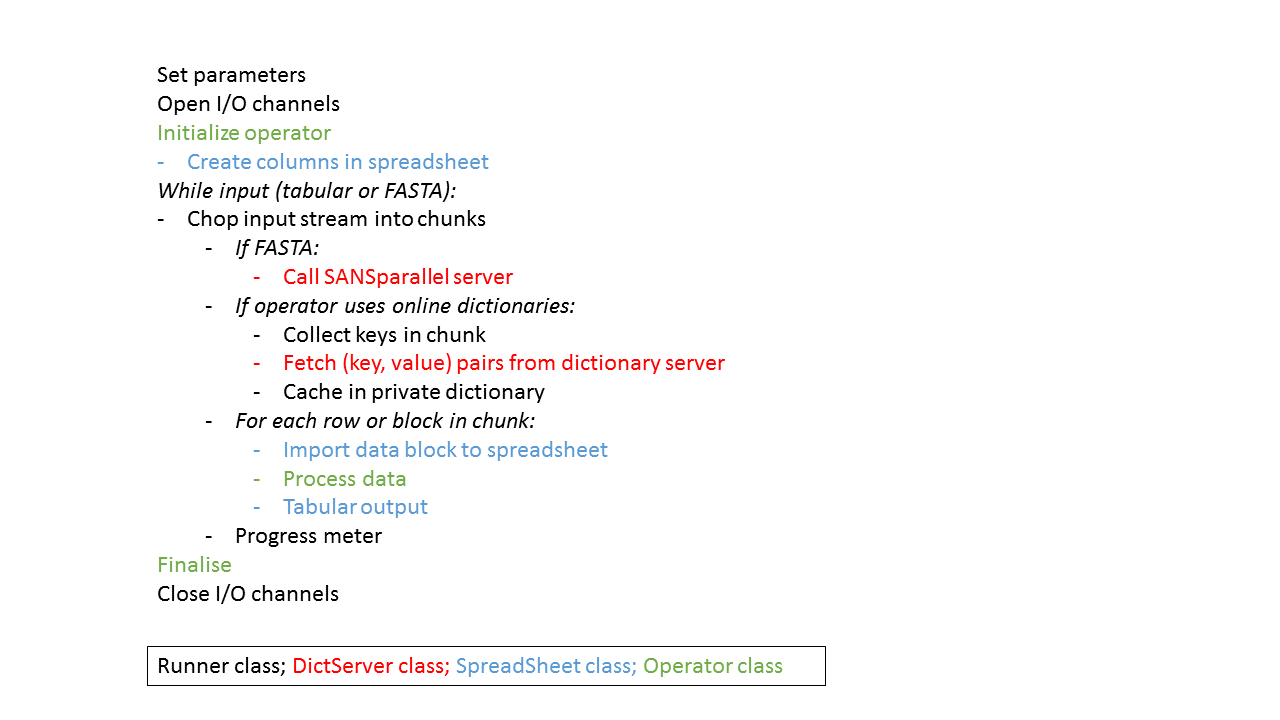

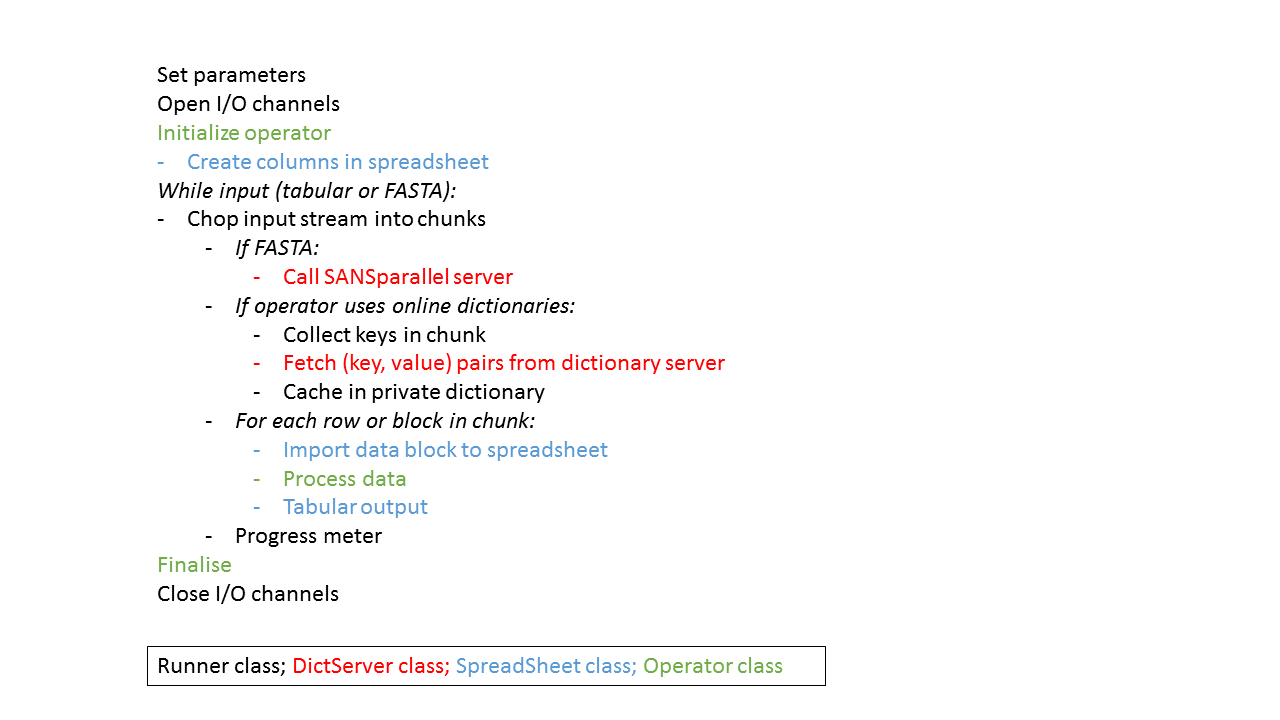

Figure 1. The main loop of SANSPANZ.

SANSPANZ provides access methods to two core servers: SANSparallel, for sequence similarity search, and the DictServer, a key/value store of metadata related to protein annotations (GO annotations, GO class frequencies, description line word frequencies, species taxonomy, etc). SANSPANZ client scripts access the servers via TCP (local servers) or HTTP protocols (remote servers). The remote servers are maintained by the authors (Holm group).

SANSPANZ accepts query sequences in FASTA format or tabular format similar to BLAST output format 6. The main loop breaks the input data into batches and, in the case of raw protein sequences, calls the SANSparallel server to get the sequence neighbourhood for each query. For all database hits, the DictServer is called to retrieve annotation metadata.

Data processing is based around a two-dimensional data matrix containing individuals (rows) and attributes (columns). Columns and rows can be appended to SpreadSheets by classes that inherit from the Operator class. Operators are defined as either row-wise (per database hit) or block-wise (per input query) depending on the nature of the statistic being calculated. Within SANSPANZ, all data processing is performed by Operators which can even be chained together to create composite Operators.

The idea behind the SANSPANZ framework is that new functionality can be developed by simply writing a new operator class, which is called from the standard workflow (Figure 1).

| Parameters | Defines the workspace with spreadsheets, dictionaries, and parameters |

|---|---|

| Runner | The main loop handling the input data stream |

| DictServer | Handles communications with the core servers |

| SpreadSheet | A data object |

| operators | Subclassed from myoperator. Modifies the contents of spreadsheets. The only part a developer needs to touch. |

The operators can call utility functions including the following modules:

| XMLParser | Specific function to parse SANSparallel results |

|---|---|

| HYGE | Functions for calculating hypergeometric statistics |

| GSZ | Functions for calculating the Gene Set Z-score statistic |

| Read_and_Print | Functions for loading dictionaries from files |

| PannzerFunctions | Specific functions used by the Pannzer predictor. |

| GO_dist_functions | A collection of GO distance related functions. |

Figure 1. The main loop of SANSPANZ.

import Parameters, Runner

if __name__ == '__main__' :

glob=Parameters.WorkSpace(configFile=None)

z=Runner.Runner(glob,operator_name=glob.param['input_OPERATOR'],CHUNK=glob.param['input_CHUNK'], liveData=glob.param['input_LIVEDATA'])

z.lazyRunner(glob.param['input_FILE'], glob.param['input_OUTFILES'].split(","), queryspecies=glob.param['input_QUERYSPECIES'], colnames=glob.param['input_COLNAMES'], block_column_name=glob.param['input_BLOCK_

COLUMN_NAME'], input_format=glob.param['input_FORMAT'])

The customized script below uses hardcoded parameters to execute the Pannzer method of function prediction starting from a set of sequences in FASTA format. On line 4, we make sure that the SANSparallel server and DictServer will be accessed remotely. The query species is defined using the -s option on the command line.

import Parameters, Runner

if __name__ == '__main__' :

glob=Parameters.WorkSpace()

glob.param['CONN_REMOTE']=True

z=Runner.Runner(glob,operator_name='Pannzer')

z.lazyRunner("--",[None, "DE.out", "GO.out", "anno.out"],queryspecies=glob.param['input_QUERYSPECIES'])

The lazyRunner method has a number of qualifiers that can be used to pass on information about the structure of the input data.

As said above, the defult input format is FASTA formatted sequences (input_format="FASTA").

The other alternative of input format is tabular data (input_format="tab").

Raw sequences are automatically sent to the SANSparallel server to get the sequence neighbourhood for each query and converted to a tabular format.

Tabular data is assumed to have column names on the first row (comment lines starting with '#' are ignored). The column separator is a tab. If the input data does not have a header row, you can define column names using the colnames qualifier. It is a string of concatenated column names, separated by spaces. For example, GO predictions could have three columns defined by colnames="qpid goid score". Column order is free, as the operators identify data columns by the header string.

Depending on the nature of the operator, the input data stream can be grouped by the variable defined by block_column_name. The default is 'qpid' (query protein identifier) when the data is a list of sequence neighbours of the query protein.

Finally, lazyRunner can be given the queryspecies qualifier if the query species is not defined in the input data. The species information is used by Pannzer.

python /data/liisa/PANNZER2/runsanspanz.py -m taxonassignment -i example_input.fasta -o ',--' 2> /dev/nullThis is the input:

>scaffold100_0M.1052_C SIZE=0 RANGE=448350-449212 (+) RNA-directed DNA polymerase YFKRIIHHNQVGFIPGLQGWFNILKSINVIQYINKRKNKNHMILSIDAEKAFDNVQHPFLIKTLQSVGIEGTYLNIIKAIYEKPTANIILNGEKLRAFPLRSGTWQGCPLSPLLFNIVLEVLVTAIRQQKIKGIIGKEEVKLSLFADDMILYVENPKDSTPKLELIQEFSKVAGYKFNAQKSIAFIYTNNKTEEREIKESIPFTIAPKTIR YLGINLTKEAKNLYCENYKILMKAIEEDTKKWKNVPCLWIGRTNIVRMSMLPRAIYTFNEILSKYHQHFSKKWN >scaffold100_0M.1321_A SIZE=0 RANGE=497083-497991 (+) Endonuclease (Fragment) KNIHIDQWHRIESPDMDPQLYGQLIFSKAGKNIQWKKDSLFNKWCWENWTSIYRRMKLNHSLTPYTKINSKWMKDLNVRQEAIKVLEENIDSNLLDIGHSNFFQDMSPKARETKVKMNFWDFIKIKSFCTAKETVNKTKRQPMEWEKIFANDTTDKGLVSKIYKELLKLNTQKTNNQVKKWAEDMNRHFSEEDIQMANRHMKKCSSSLAIR EIQIKTTLRYHLTPVRMAETDKARNNKCWRGCGERGTLLHCWWECKLVPLWKTVWSFLKKLKIELLYDPAIALLGIYPKDSDVVKRRAICTThis is the output (the numbers may change with database updates):

qpid taxon count total frequency rank scaffold100_0M.1052_C Eukaryota; Metazoa 186 195 0.953846153846 1 scaffold100_0M.1321_A Eukaryota; Metazoa 208 220 0.945454545455 1

1:from myoperator import RowOperator

2:import sys

3:

4:# Clone a rowwise operator. Class name and file name (operators/classname.py) must be identical.

5:class taxon(RowOperator) :

6: """Extract taxon from lineage to taxon_depth."""

7: def __init__(self,glob):

8: # define nicknames for column indices

9: [self.lineage_col, self.taxon_col]=glob.use_sheet("data").use_columns(["lineage","taxon"])

10: self.taxon_depth=int(glob.param['TAXON_DEPTH'])

11:

12: def process(self, row):

13: x=row[self.lineage_col]

15: tmp=x.split(";")

16: if len(tmp) > self.taxon_depth:

17: row[self.taxon_col]=";".join(tmp[0:self.taxon_depth])

18: else:

19: row[self.taxon_col]=";".join(tmp)

1: import sys

2: from myoperator import RowOperator

3:

4: # Clone a rowwise operator. Class name and file name (operators/classname.py) must be identical.

5: class lineage(RowOperator) :

6: """Do a dictionary lookup for the taxonomic lineage of a species."""

7: def __init__(self,glob):

8: self.glob=glob

9: # define nicknames for column indices

10: [self.species_col, self.lineage_col]=self.glob.use_sheet("data").use_columns(["species","lineage"])

11: # use online dictionary. Object handles in glob are hardcoded

12: self.glob.use_online_dictionaries(["LINEAGE"])

13:

14: def process(self,row):

15: species=row[self.species_col]

16: row[self.lineage_col]=self.get_lineage(species)

17:

18: def get_lineage(self,species):

19: ucspecies=species.upper() # lineage keys are UPPERCASE species

20: if ucspecies in self.glob.lineage: return(self.glob.lineage[ucspecies])

21: if species != "unknown": sys.stderr.write("# Warning: unknown species ||%s||\n" %species)

22: return("unclassified")

Dictionaries can be online or local. We use online dictionaries to save memory and loading time. The DictServer.lookup_key_values() method queries an online dictionary with a (table, key) tuple and it returns a (table, key, value) tuple.

Runner.lazyRunner caches data for all dictionaries below, because the same keys are likely to recur frequently in lists of homologous proteins. For LINEAGE, keys are taken from column 'species'. For GODICT, keys are taken from column 'spid' or 'qpid'. For DESCCOUNT and WORDCOUNT, keys are taken from column 'desc'. GOIDELIC downloads a copy of the entire GO structure (about 44,000 GO terms). Object handles to the cached dictionaries are defined in glob and fixed.

The available online dictionaries are the following. All keys must be uppercase.

| Subscribe to | Table name | Key | Value | Object handle to cached data |

| LINEAGE | LINEAGE | species scientific name | Taxonomic lineage of species | glob.lineage |

| TAXID | TAXID | species scientific name | NCBI numeric identifier of taxon | glob.taxid |

| WORDCOUNT | WORDCOUNT | word | Number of descriptions in uniprot that contain word | glob.wordcounts |

| WORDCOUNT | NWORDTOTAL | None | Total number of unique words (cleaned) in uniprot DE-lines | glob.nwordtotal (constant) |

| DESCCOUNT | DESCCOUNT | uniprot description | Number of identical descriptions in uniprot | glob.desccounts |

| DESCCOUNT | NPROT | None | NoneTotal number of protein entries in uniprot | glob.nprot (constant) |

| GODICT | GODICT | uniprot accession | GO identifiers assigned to uniprot entry by GOA | glob.GOdict |

| GOIDELIC | GOCOUNTS | GO identifier | Total number of uniprot entries assigned to GO term (propagated to parents) | glob.GOcounts |

| GOIDELIC | GOPARENTS | GO identifier | GO identifiers of parental line | glob.GOparents |

| GOIDELIC | GODESC | GO identifier | Short description of GO term | glob.godesc |

| GOIDELIC | ONTOLOGY | GO identifier | BP, MF or CC | glob.ontology |

| GOIDELIC | IC | GO identifier | Information content of GO term | glob.IC |

| GOIDELIC | EC | GO identifier | Inverse of ec2go | glob.EC |

| GOIDELIC | KEGG | GO identifier | Inverse of kegg2go | glob.KEGG |

You can naturally also load your own dictionary from a file using, for example, Read_and_Print.read_dict_data(). The data should be tab-separated (key,value) pairs. Attach your dictionary to the workspace object (here: glob) if it needs to be visible to other operators.

1:from myoperator import BlockOperator

2:import operator

3:

4:# Clone a blockwise operator. Class name and file name (operators/classname.py) must be identical.

5:class taxonassignment(BlockOperator) :

6: """Outputs most frequent taxon per block."""

7: def __init__(self,glob, maxrank=1):

8: # parameters used by self.process()

9: self.glob=glob

10: self.maxrank=maxrank

11: # define private object handle to spreadsheets (glob maps handles by name)

12: [self.data,self.summary_data]=glob.use_sheets(["data","summary"])

13: # define nicknames for column indices

14: [self.qpid_col1,self.isquery_col,self.taxon_col1]=self.data.use_columns(["qpid","isquery","taxon"])

15: self.summary_data.use_columns(["qpid","taxon","count","total","frequency","rank"])

16: # this is a composite operator

17: [self.a,self.b]=glob.use_operators(['lineage','taxon'])

18:

19: def process(self, block):

20: # call preprocessing operators

21: for row in block:

22: self.a.process(row) # add lineage

23: self.b.process(row) # add taxon

24: # get query

25: qpid='unknown'

26: for row in block:

27: if row[self.isquery_col]=="1": qpid=row[self.qpid_col1] # string comparison

28: # compute sum statistics

29: n=0

30: cnt={}

31: for row in block:

32: n+=1

33: taxon=row[self.taxon_col1]

34: if not taxon in cnt: cnt[taxon]=0

35: cnt[taxon]+=1

36: # reverse sort by counts

37: sorted_cnt = sorted(cnt.items(), key=operator.itemgetter(1), reverse=True)

38: # save top ranks in summary table

39: rank=0

40: for (taxon,cnt) in sorted_cnt:

41: rank+=1

42: freq=0

43: if n>0: freq=cnt/float(n)

44: # assume fixed column order

45: datarow=[qpid,taxon,str(cnt),str(n),str(freq),str(rank)]

46: self.summary_data.append_row(datarow)

47: if rank>=self.maxrank: break

def SANS_score(self,goid):

"Frequency of GO classes in sequence neighborhood. Returns (raw score, PPV)."

obs=float(self.goclasscount[goid])/self.sampleSize

ppv=obs

return(obs,ppv)

Scoring functions can be specified by name from the command line (--PANZ_PREDICTOR, --eval_SCOREFUNCTIONS), and the RM3.process method will then execute the appropriate xxx_score function.

python runsanspanz.py -m gaf2propagated -i testset_truth.gaf -f tab -c "qpid goid" -o ",testset_truth_propagated"Below, we save the result from homology search for reuse later with different prediction parameters. Scoring functions for prediction and evaluation are selected with the --PANZ_PREDICTOR and --eval_SCOREFUNCTIONS arguments.

python runsanspanz.py -m SANS -f FASTA -i testset.fasta -o testset.fasta.sans python runsanspanz.py -m Pannzer -f tab -i testset.fasta.sans --PANZ_PREDICTOR "RM3,ARGOT,JAC,HYGE,SANS" -o ',,testset.fasta.sans.GO,' python runsanspanz.py -m GOevaluation -f tab -i testset.fasta.sans.GO --eval_TRUTH testset_truth_propagated --eval_SCOREFUNCTIONS "RM3_PPV ARGOT_PPV JAC_PPV HYGE_PPV SANS_PPV" -o ',,testset.fasta.sans.GO.eval'

import Parameters, Runner

if __name__ == '__main__' :

glob=Parameters.WorkSpace()

z=Runner.Runner(glob, operator_name='myfavourite')

z.lazyRunner("--", ["--"],input_format="tab",colnames="col1 col2")

from myoperator import BlockOperator class myfavourite(BlockOperator):or

from myoperator import RowOperator class myfavourite(RowOperator):(The type TextOperator was created as an afterthought, it bypasses the main loop and passes each line of the input stream directly to the operator's process() function. operators/obo.py is a TextOperator that parses the GO hierarchy out of an OBO file.)

The __init__ method is called with a Parameters.WorkSpace() object handle, for example:

def __init__(self,glob):The __init__ method should define:

[self.data,self.summary_data]=glob.use_sheets(["data","summary"])or

self.data=glob.use_sheet("data")

[self.qpid_col1,self.isquery_col,self.taxon_col1]=self.data.use_columns(["qpid","isquery","taxon"]) [self.qpid_col2,self.taxon_col2,self.count_col,self.total_col,self.freq_col,self.rank_col]=self.summary_data.use_columns(["qpid","taxon","count","total","frequency","rank"])or

[self.lineage_col, self.taxon_col]=glob.use_sheet("data").use_columns(["lineage","taxon"])

glob.use_online_dictionaries(["LINEAGE","WORDCOUNT","DESCCOUNT","GOIDELIC","GODICT"])or

glob.load_dictionaryname()where dictionaryname is one of lineage, wordcounts, desccounts, GOdict, GOdict_noIEA, goidelic, nprot or nwordtotal. The respective data file names are defined by parameters in glob.param. Small private dictionaries can be loaded from a file using the function Read_and_Print.read_dict_data()

[self.a,self.b]=glob.use_operators(['lineage','taxon'])

def process(self, block):or

def process(self, row):

The process() method writes new values to the cells of the spreadsheet objects, and this is the part that is entirely up to you. The Runner object (see main application script) takes automatically care of all input and output.

The operator class can also have a finalise() method which is called after the main loop has finished. This can for example print out the total number of queries that have been processed.

-m Pannzer --PANZ_PREDICTOR NEWFUNCon the command line. You can include several scoring functions in one Pannzer run.

-m gaf2propagated -i mytruth.gaf -o ",mytruth"on the command line.

-m GOevaluation --eval_SCOREFUNCTIONS NEWFUNC --eval_TRUTH mytruthon the command line. You can include several scoring functions in one GOevaluation run.